This article is not legal advice. It’s a practical, human‑readable CC BY‑NC‑ND for ML explained guide to help researchers achieve AI dataset license compliance when working with noncommercial no‑derivatives AI datasets.

TL;DR (for researchers)

- Research‑only dataset use: Treat CC BY‑NC‑ND datasets as research only; do not use in any product or monetized service.

- Commercial use restrictions (NC): If the use is directed toward commercial advantage, it’s not allowed.

- No‑derivatives (ND): Avoid redistributing datasets vs mirrors, releasing modified data, or publishing releasing checkpoints / weights if those are considered derivatives.

- Keep a documented dataset license checklist and follow ML licensing best practices.

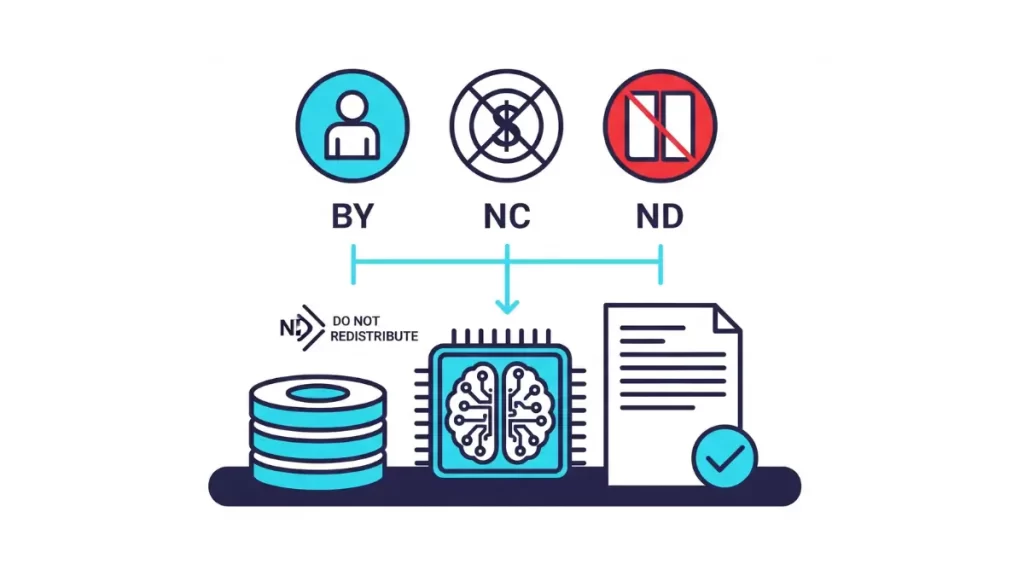

What CC BY‑NC‑ND means in machine learning

BY (Attribution): Provide clear attribution (BY) requirements—cite the dataset in papers, READMEs, and demos.

NC (NonCommercial): Commercial use restrictions (NC) block uses aimed at revenue or competitive advantage—ads, paid features, enterprise demos, etc.

ND (NoDerivatives): You may share the original files, but no‑derivatives (ND) and model weights means you generally cannot share adapted materials (including curated subsets or potentially trained weights) without permission.

In short, cc by‑nc‑nd machine learning usually allows private experiments and publishing numbers, but not distribution of altered data or weights.

Dataset license checklist (print this)

- Confirm the license text and any publisher FAQ (some clarify the status of trained weights).

- Document research‑only dataset use in your repo.

- Separate storage/workspaces for ND data and runs (mark as

(Research‑Only)). - Prohibit redistributing datasets vs mirrors unless explicitly allowed.

- Define a releasing checkpoints / weights policy—default to don’t release for ND.

- Run a legal risks for AI training data review before any public release.

- Keep attribution (BY) requirements templates ready for papers and demos.

Are trained weights derivatives under CC BY‑NC‑ND for LM?

This is a frequent grey area—are trained weights derivatives? Licensors vary. If the dataset FAQ doesn’t clearly allow releasing weights, assume weights are derivatives and do not publish without permission. Safer posture: keep weights internal, label them Research‑Only, and train commercial models on permissive data instead.

Redistributing datasets vs mirrors

Many ND datasets forbid redistribution even as 1:1 mirrors. Link to the original source instead. If redistribution is allowed, keep the archive unaltered and include full attribution. Never publish curated “improved” versions under ND unless you have explicit permission.

Releasing checkpoints / weights policy

Under ND, releasing checkpoints can be interpreted as distributing derivatives. If you must share for peer review, seek written permission, share hashes/metrics rather than weights, or provide scripts that reproduce results from the original dataset without shipping altered data.

Combining ND datasets with permissive data

Combining ND datasets with permissive data can taint the resulting model. Keep ND‑trained runs fully segregated; do not mix weights with permissively trained models you intend to release. Publish results (numbers) rather than artifacts if you need public proof.

CC BY‑NC‑ND for ML licensing best practices

- Maintain a central AI dataset license compliance doc for your lab/team.

- Tag repos and checkpoints with license metadata.

- Use access controls on ND data buckets.

- Prefer permissive datasets for anything public; reserve ND data for private ablations.

- Publish model/data cards capturing scope, restrictions, and commercial use restrictions (NC).

- Conduct periodic audits using the dataset license checklist above.

When to seek a separate license

Seek a bespoke license when you want to: (a) publish trained weights, (b) ship any feature powered by ND‑trained artifacts, or (c) redistribute modified data. Negotiating permission converts risky cc by‑nc‑nd machine learning scenarios into permitted use.

Typical ML activities — risk table

| Activity | Likely OK (with attribution) | Caution | Not OK |

|---|---|---|---|

| Private, internal research & experiments | ✅ | ||

| Publishing benchmarks/metrics based on the dataset | ✅ | ||

| Sharing unaltered dataset mirrors | ⚠️ Confirm license; many forbid redistribution | ||

| Sharing modified datasets or curated subsets | ⚠️ Often ND‑restricted | ❌ if ND applies | |

| Releasing trained weights based solely on ND data | ⚠️ Ambiguous; treat as high‑risk | ❌ if considered a derivative | |

| Using results/weights in a commercial product | ❌ (NC) | ||

| Posting edited images derived from the data | ⚠️ Depends on terms & privacy | ❌ if derivative redistribution |

Note: Some publishers clarify whether training is allowed and whether weights count as derivatives. Always read the dataset’s README/FAQ and abide by any explicit allowances or prohibitions.

FAQs

Can I use CC BY‑NC‑ND data to train a private model?

Yes, typically for research‑only dataset use with proper attribution. Do not deploy it in commercial settings.

Are weights derivatives under CC BY‑NC‑ND?

Often treated as derivatives unless the licensor says otherwise. When unclear, avoid publishing weights and keep them internal.

Is evaluation/benchmarking allowed with ND datasets?

Usually yes: publish metrics and analysis (not data or weights). This supports AI dataset license compliance without redistribution

Can I publish sample outputs from ND datasets?

In limited, academic contexts with attribution and low‑res/watermarked examples; avoid bulk/high‑res sets or anything resembling redistribution.

What counts as commercial use in ML?

If the model, weights, or outputs are used for monetized features, enterprise trials, paid APIs, or marketing advantage, that’s commercial.

Mixing ND and permissive datasets risks

Mixing can complicate licensing of downstream artifacts; keep ND runs separate and do not merge checkpoints you plan to release.

How to attribute datasets in research

Follow the dataset’s citation format, add a clear acknowledgment in READMEs, and include license notices in demo pages.

Safe workflows for ND datasets

Use private repos, limit access, avoid redistribution, watermark demos, and document the releasing checkpoints / weights policy.

When to seek a separate license

Seek permission before distributing trained weights or modified data, or if planning any commercial deployment.

CC BY‑NC‑ND vs CC BY‑SA for AI

CC BY‑SA allows derivatives if you share alike; CC BY‑NC‑ND forbids derivatives and commercial use, much stricter for ML.

Related articles

- How‑to guide: How to Use Pico‑Banana‑400K for Research (Step‑by‑Step)

- Main explainer: Pico-Banana-400K: Apple’s 400K Real-Image Editing Dataset

Resources & Further Reading

Official license & core docs

- CC BY-NC-ND 4.0 — Deed (human-readable). Highlights the key terms. Creative Commons

- CC BY-NC-ND 4.0 — Legal code (full text). The binding license. Creative Commons

- Creative Commons FAQ. Adaptations, mixing licenses, and common edge cases. Creative Commons

- NonCommercial interpretation (CC Wiki). What “primarily intended for commercial advantage” means. Creative Commons

- Recommended practices for attribution. How to meet the BY requirement. Creative Commons

- License versions overview. Why 4.0 is the current international version. Creative Commons

CC, AI & training guidance

- Understanding CC licenses & generative AI (CC). What CC licenses do—and don’t—cover for AI uses. Creative Commons

- Using CC-licensed works for AI training (CC). Step-by-step legal considerations across jurisdictions. Creative Commons

- “Should CC-licensed content be used to train AI? It depends.” (CC). Context on fair use (US) and EU TDM exceptions. Creative Commons

- U.S. Copyright Office—AI & Generative Training (Report, 2025). Current U.S. policy analysis and references. U.S. Copyright Office

Weights/derivatives discussion (for context)

- Are model weights protected/copyrightable? Overview of arguments & functional-facts critique. Legal Blogs

- The Turing Way—Licensing ML models. Notes the open question about weights and emerging model licenses. book.the-turing-way.org

- Legal commentary: memorization & distribution risks (Norton Rose Fulbright, 2025). Practical risk framing. Norton Rose Fulbright

- Academic review: memorization & copyright risks (OUP JIPLP, 2025). Evidence and open issues. OUP Academic

Alternatives & permissive options for data (when you need commercial-friendly terms)

- Open Data Commons licenses (ODbL, ODC-By, PDDL). Data-specific open licenses. opendatacommons.org

- ODbL summary/legal text. Attribution + Share-Alike for databases. opendatacommons.org+1

- CDLA-Permissive-2.0 (Linux Foundation). Short, permissive data license designed with AI/ML in mind. CDLA+2Linux Foundation+2

- Open-licenses primer (resources.data.gov). U.S. gov guidance on what counts as “open.” resources.data.gov

Model/dataset licensing in practice

- Hugging Face—Licenses on the Hub. How to declare licenses for models/datasets. Hugging Face

- Dataset Cards (HF). Where to document license + usage notes for datasets. Hugging Face

- OpenRAIL-M (BigScience / HF). AI-specific “open & responsible” licenses for models. Hugging Face+1

2 responses to “CC BY‑NC‑ND for ML: What You Can & Can’t Do”

-

Hi my family member I want to say that this post is awesome nice written and come with approximately all significant infos I would like to peer extra posts like this

-

Every time I visit your website, I’m greeted with thought-provoking content and impeccable writing. You truly have a gift for articulating complex ideas in a clear and engaging manner.

Leave a Reply